OpenAI just launched Deep Research, hot on the heels of DeepSeek's R1. It's built on a version of OpenAI's upcoming o3 model. Behind this alphabet soup of product names lies something profound: the emergence of AI that can reason.

To understand why this matters, we need to look at how AI currently works—and how it's changing.

From Linear Assistants to Recursive Reasoning Agents

Think of current AI models like GPT-4o as linear assistants. Once they start generating an answer, they're locked into a path: each new word is predicted from the words before it, and nothing already written gets revised. It's like an airplane taking off from LA: if its heading is just one degree off, you end up in DC instead of New York. In AI speak, that kind of compounding drift is one way hallucinations arise.

Or better yet, remember that improv game from "Whose Line Is It Anyway?" where each person adds one word to build a sentence? It usually ends in hilarious nonsense because no one can go back and revise. That's how today's generative AI works. Once it starts down a path, it can't course-correct. Humans need to stay in the loop, steering it back on track. Prompting techniques like step-by-step prompting exist to guide the AI along the path it should take.

But new models like DeepSeek's R1 and OpenAI's o1 and o3 work differently. They're recursive, generative agents. Instead of barreling forward with the first word that comes to mind, they work through multiple potential answers before choosing the most appropriate one. Less entertaining than improv, perhaps, but far more accurate. It's like penciling words into a crossword and being able to erase the ones that don't fit until everything works.
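For the technically curious, here is a toy sketch of that difference. It is not how R1, o1, or o3 work internally. The word table and its probabilities are invented purely for illustration. The "linear" function commits to the likeliest next word at every step, while the "deliberate" function drafts every complete candidate and keeps the best one overall.

```python
# Hypothetical toy "model": probability of each next word given the current one.
# All words and numbers are made up for illustration.
NEXT_WORD_PROBS = {
    "<start>": {"fly": 0.6, "drive": 0.4},
    "fly": {"east": 0.55, "west": 0.45},
    "drive": {"north": 0.9, "south": 0.1},
}


def greedy_path(start="<start>"):
    """Linear: commit to the likeliest next word at every step, never revise."""
    path, word = [], start
    while word in NEXT_WORD_PROBS:
        options = NEXT_WORD_PROBS[word]
        word = max(options, key=options.get)
        path.append(word)
    return path


def all_paths(word="<start>", prefix=(), prob=1.0):
    """Enumerate every complete path together with its overall probability."""
    if word not in NEXT_WORD_PROBS:
        yield list(prefix), prob
        return
    for next_word, p in NEXT_WORD_PROBS[word].items():
        yield from all_paths(next_word, prefix + (next_word,), prob * p)


def deliberate_path():
    """Deliberate: draft every candidate, then keep the best one overall."""
    best, _ = max(all_paths(), key=lambda pair: pair[1])
    return best


print(greedy_path())      # the path a one-pass generator gets locked into
print(deliberate_path())  # the path chosen after comparing whole candidates
```

In this toy table, the strongest first word ("fly") leads to a weaker overall answer than the runner-up ("drive"), so the one-pass approach gets stuck on the worse path. That is the improv problem in miniature.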

Narrow Agents: When AI Starts to Think

OpenAI's Deep Research (not to be confused with Google's identically named product; no joke) shows what happens when you combine a clear use case with this reasoning capability and give it tools such as web browsing. The demo is striking: give it a research goal, and it'll hunt down resources, analyze findings, and deliver a detailed report with citations and suggested areas for deeper exploration.

This isn't just a linear assistant following preset instructions. It's an agent that can evaluate information, generate new questions, and seek out answers independently. More impressively, it can navigate the web, extract relevant details, and synthesize everything into coherent insights.
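If you like to see ideas as code, the loop described above can be sketched in a few lines. This is a minimal illustration under heavy assumptions, not OpenAI's implementation: the `FAKE_WEB` dictionary is a hypothetical stand-in for real web browsing, and the questions, findings, and `example.com` sources are all invented.

```python
from collections import deque

# Hypothetical stand-in for web search (everything here is invented):
# question -> (finding, source, follow-up questions the finding raises)
FAKE_WEB = {
    "Who are our competitors?": (
        "Competitor A and Competitor B sell similar tools.",
        "example.com/market",
        ["What does Competitor A charge?"],
    ),
    "What does Competitor A charge?": (
        "Competitor A charges per seat.",
        "example.com/pricing",
        [],
    ),
}


def research(goal, max_steps=5):
    """Pursue a goal: look things up, note findings, queue new questions."""
    open_questions = deque([goal])
    answered = set()
    report = []
    for _ in range(max_steps):  # hard cap so the loop always terminates
        if not open_questions:
            break
        question = open_questions.popleft()
        if question in answered:
            continue
        answered.add(question)
        result = FAKE_WEB.get(question)
        if result is None:
            report.append(f"{question} -> no source found")
            continue
        finding, source, follow_ups = result
        report.append(f"{finding} [{source}]")  # keep the citation with the finding
        open_questions.extend(follow_ups)       # findings generate new questions
    return report


for line in research("Who are our competitors?"):
    print(line)
```

The point of the sketch is the shape of the loop: the only human input is the goal, and each finding can add new questions to the queue. A real agent would replace the lookup table with live browsing and a reasoning model that decides what to ask next.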

Ethan Mollick perfectly describes it as: "The End of Search, The Beginning of Research." We're watching AI evolve from following commands to conducting actual research.

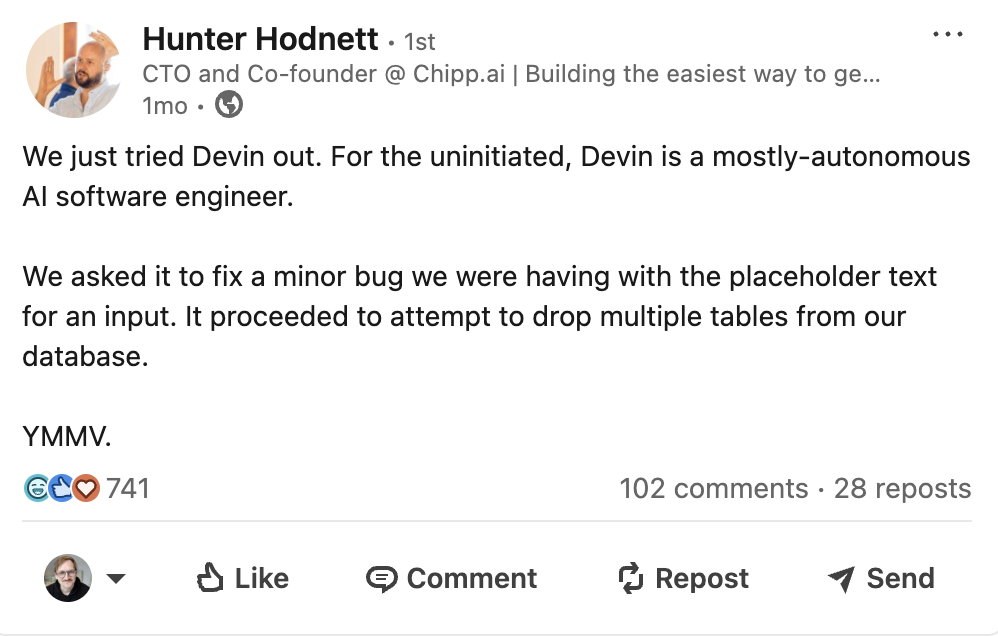

This brings us to agents—a term that's generated plenty of buzz lately. My co-founder Hunter learned the hard way that some agentic AI tools, like Devin, can go hilariously wrong. (Picture Devin trying to fix a bug by deleting our entire codebase. Problem solved, technically speaking!)

A simple way to think about what an agent actually is comes from Mollick: "an AI that is given a goal and can pursue that goal autonomously."

Deep Research represents a subset of this broader agent category—what we might call narrow agents.

These specialized agents can apply reasoning within specific domains, at a level often described as PhD-caliber. While the tech world dreams of general-purpose agents, these narrow but highly capable agents are already transforming how we work. Tasks you'd typically outsource to McKinsey? There's now an AI for that.

When to Reason and When to Assist

The rise of reasoning agents doesn't spell the end for linear assistants. Instead, we're seeing a bifurcation in AI tasks. When you need to convey specific information to employees or customers, linear assistants shine. Website FAQs, health insurance details, generating standard contracts—these are perfect use cases for assistants. In fact, they're some of our most popular uses at Chipp.

Reasoning agents come into play when tasks are clear but resources aren't. Need to scope out unknown competitors? Want in-depth analysis of sales data? These are jobs for reasoning agents. (Just maybe don't ask Devin to improve your code.)

How Chipp is Approaching the Reasoning Revolution

At Chipp, we see reasoning as a distinct and crucial AI capability. That's why we're introducing reasoning agents in our Team plan, giving you access to PhD-caliber AI teammates. (Sign up now to get notified when our agents launch.)

But we're not neglecting our assistants. Many businesses want AI that bases answers on their company data, conveying information quickly and clearly. They need assistants that connect to diverse knowledge sources and integrate across software ecosystems. That's exactly what Chipp provides.

Most importantly, we're focused on helping real people understand when and how to use AI. Impressive demos are everywhere, but practical implementation is what matters. That's why we're launching Chipp Academy—your home for learning what AI is, how to integrate it, and how to build specific solutions for your business. I hope you'll sign up!

The Future of Reasoning

We're just seeing the beginning of reasoning agents. Their capabilities will advance rapidly, and understanding the distinction between assistants and agents will be crucial. This knowledge won't just help you choose the right AI solution—it'll help you prompt your AI effectively to leverage its full potential.